James-Stein Gradient Combiner for Inverse Monte Carlo Rendering

Gwangju Institute of Science and Technology

ACM SIGGRAPH 2025 Conference Proceedings

Abstract

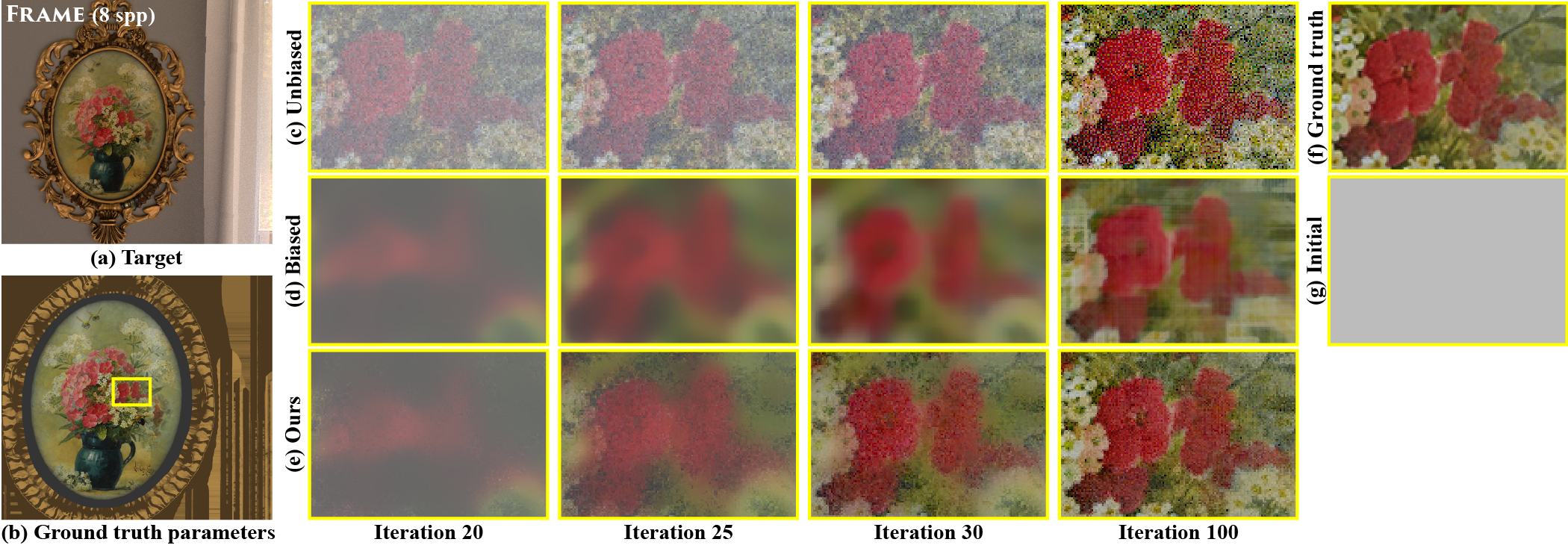

Inferring scene parameters such as BSDFs and volume densities from user-provided target images has been achieved using a gradient-based optimization framework, which iteratively updates the parameters using the gradient of a loss function defined by the differences between rendered and target images. The gradient can be estimated without bias via physics-based rendering, i.e., differentiable Monte Carlo rendering. However, the estimated gradient becomes noisy unless a large number of samples is used for gradient estimation, and relying on this noisy gradient often slows optimization convergence. An alternative is to exploit a biased version of the gradient, e.g., a filtered gradient, to achieve faster convergence. Unfortunately, this can result in scene parameters that are less noisy but overly blurred compared to those obtained using unbiased gradients. This paper proposes a gradient combiner that blends unbiased and biased gradients in parameter space instead of relying solely on one gradient type (i.e., unbiased or biased). We demonstrate that optimization with our combined gradient enables more accurate inference of scene parameters than using unbiased or biased gradients alone.
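To make the idea of blending the two gradient types concrete, the following is a minimal sketch of the classical positive-part James-Stein estimator, which shrinks a noisy unbiased estimate toward a biased target. It is an illustration under stated assumptions, not necessarily the combiner proposed in the paper: the function name `james_stein_combine`, the flat-list gradient representation, and the single known per-component noise variance `variance` are all hypothetical choices made for this example.

```python
def james_stein_combine(unbiased, biased, variance):
    """Positive-part James-Stein shrinkage of `unbiased` toward `biased`.

    Assumptions (not from the paper): gradients are flat lists of floats,
    and every component of `unbiased` has the same known noise variance.
    """
    p = len(unbiased)
    if p < 3:
        # The James-Stein factor (p - 2) gives no shrinkage benefit below 3 dimensions.
        return list(unbiased)

    # Difference between the noisy unbiased estimate and the biased target.
    diff = [u - b for u, b in zip(unbiased, biased)]
    sq_dist = sum(d * d for d in diff)
    if sq_dist == 0.0:
        # Both estimates agree exactly, so there is nothing to blend.
        return list(biased)

    # Weight on the unbiased estimate: near 1 when the two gradients differ
    # by much more than the noise level, clamped at 0 when they do not.
    weight = max(0.0, 1.0 - (p - 2) * variance / sq_dist)
    return [b + weight * d for b, d in zip(biased, diff)]


# Low noise: the result stays close to the unbiased gradient.
print(james_stein_combine([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0], 1.0))
# High noise: the result falls back to the biased gradient.
print(james_stein_combine([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0], 100.0))
```

In this sketch the blend weight adapts to the data: when the unbiased gradient deviates from the biased one by much more than its noise level, the unbiased estimate dominates, and otherwise the biased estimate does. In practice the variance would itself have to be estimated from samples, which this example does not attempt.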