Neural James-Stein Combiner for Unbiased and Biased Renderings

Gwangju Institute of Science and Technology¹, University of A Coruña - CITIC²

ACM Transactions on Graphics (SIGGRAPH Asia 2022)

Received Best Paper Award at SIGGRAPH Asia 2022

Abstract

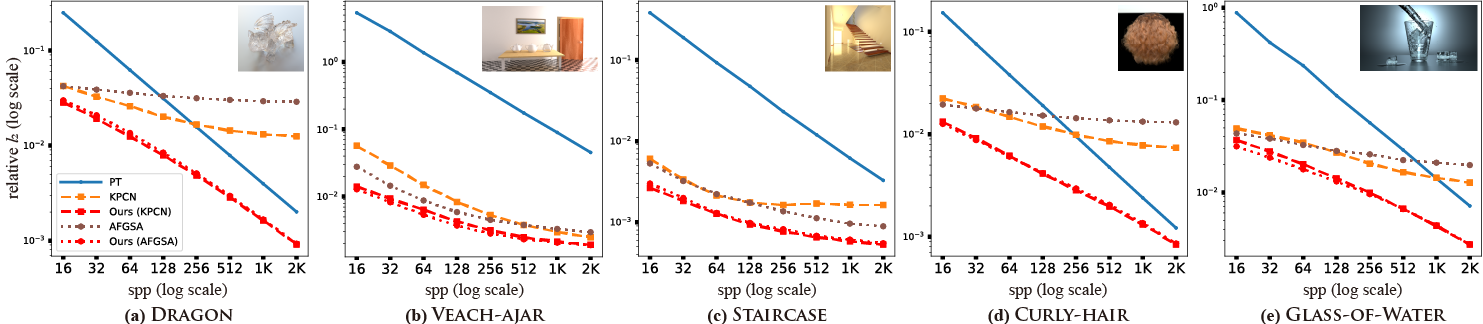

Unbiased rendering algorithms such as path tracing produce accurate images given a huge number of samples, but in practice they often leave visually distracting artifacts (i.e., noise) in their rendered images due to a limited time budget. A favored approach for mitigating the noise problem is applying learning-based denoisers to unbiased but noisy rendered images and suppressing the noise while preserving image details. However, such denoising techniques typically introduce a systematic error, i.e., the denoising bias, which does not decline as rapidly as the other type of error, i.e., variance, when the sample size increases. This bias can lead to slow numerical convergence of the denoising techniques. We propose a new combination framework built upon the James-Stein (JS) estimator, which merges a pair of unbiased and biased rendered images, e.g., a path-traced image and its denoised result. Unlike existing post-correction techniques for image denoising, our framework helps an input denoiser have lower errors than its unbiased input without relying on accurate estimation of per-pixel denoising errors. We demonstrate that our framework based on the well-established JS theories allows us to improve the error reduction rates of state-of-the-art learning-based denoisers more robustly than recent post-denoisers.
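To give a feel for the kind of combination the abstract describes, the following is a minimal sketch of the classical positive-part James-Stein estimator, which shrinks an unbiased estimate toward a biased one. It is the textbook form, not the neural combiner from the paper. The function name `james_stein_combine`, the use of a single known variance for all values, and the treatment of the biased values as a fixed shrinkage target are all assumptions made for illustration.

```python
def james_stein_combine(unbiased, biased, variance):
    """Positive-part James-Stein shrinkage of `unbiased` toward `biased`.

    unbiased: noisy but unbiased values (e.g. path-traced pixels in a patch)
    biased:   values of the same length used as the shrinkage target
              (e.g. the denoised pixels of the same patch)
    variance: assumed known variance of each unbiased value
              (hypothetical simplification: one shared variance)
    """
    p = len(unbiased)
    if p != len(biased):
        raise ValueError("inputs must have the same length")
    if p < 3:
        # The classical James-Stein result requires at least 3 dimensions.
        raise ValueError("need at least 3 values")

    diff = [u - b for u, b in zip(unbiased, biased)]
    dist2 = sum(d * d for d in diff)
    if dist2 == 0.0:
        # The two estimates agree exactly; nothing to combine.
        return list(biased)

    # Shrinkage factor in [0, 1): 0 keeps the biased target,
    # values near 1 keep the unbiased input.
    shrink = max(0.0, 1.0 - (p - 2) * variance / dist2)
    return [b + shrink * d for b, d in zip(biased, diff)]


if __name__ == "__main__":
    noisy = [1.0, 2.0, 3.0, 4.0]      # stand-in for path-traced values
    denoised = [1.0, 1.0, 1.0, 1.0]   # stand-in for denoised values
    print(james_stein_combine(noisy, denoised, variance=1.0))
```

When the assumed variance is large relative to the squared distance between the two inputs, the result falls back to the biased target; as the variance approaches zero (more samples), the result approaches the unbiased input. This mirrors, in a simplified way, the behavior one would want from a combiner whose error should keep decreasing as the sample count grows.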

Errata

The paper reported the computational overhead of a previous denoising method (AFGSA) as 0.01 sec; the correct values are 2.4 sec and 2.1 sec for 1024 × 1024 and 1280 × 720 images, respectively.